# Overview

```{admonition} The Acronym

MINDecoding = Movement Intent Decoding

```

This project contains training and testing software for movement intent decoders, which convert biological signals, such as EMG and EEG, into control signals for prosthetic limbs.

The software consists of a computational process called the Backend and a user interface called the GUI, which connects to Backend processes over sockets.
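As a rough illustration of the GUI-to-Backend link, here is a minimal sketch of two endpoints exchanging JSON messages over a socket. The message format (newline-terminated JSON), the `start_recording` command, and the helper names are all assumptions made for this example; they are not taken from the project's actual protocol.

```python
import json
import socket


def send_message(sock, message):
    """Send a dict as one newline-terminated JSON line (assumed framing)."""
    sock.sendall(json.dumps(message).encode("utf-8") + b"\n")


def receive_message(sock):
    """Read until a newline arrives, then decode the JSON line.

    This simple reader assumes only one message is in flight at a time.
    """
    buffer = b""
    while not buffer.endswith(b"\n"):
        chunk = sock.recv(4096)
        if not chunk:
            raise ConnectionError("socket closed before a full message arrived")
        buffer += chunk
    return json.loads(buffer.decode("utf-8"))


def demo():
    # socketpair() returns two connected sockets inside one process.
    # The real GUI and Backend would be separate processes.
    gui_sock, backend_sock = socket.socketpair()
    try:
        # Hypothetical command name, used only for illustration.
        send_message(gui_sock, {"command": "start_recording"})
        request = receive_message(backend_sock)
        send_message(backend_sock, {"status": "ok", "echo": request["command"]})
        return receive_message(gui_sock)
    finally:
        gui_sock.close()
        backend_sock.close()


if __name__ == "__main__":
    print(demo())
```

Keeping the computation in a separate process behind a socket means the interface can stay responsive while the Backend does the heavy work.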

This repo is a continuation and upgrade of Sean Sylwester's Integrated System repo, which is no longer in active development.

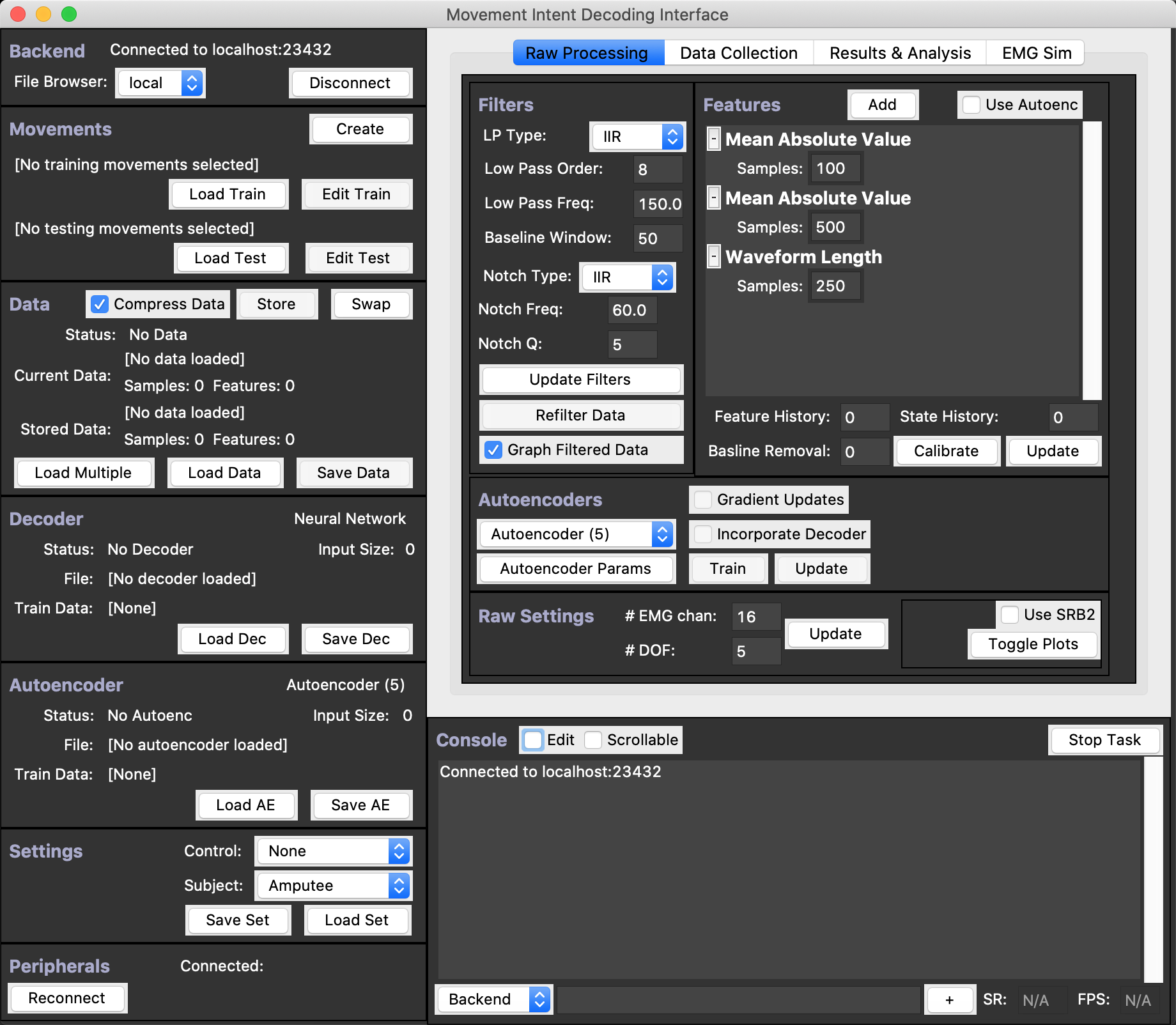

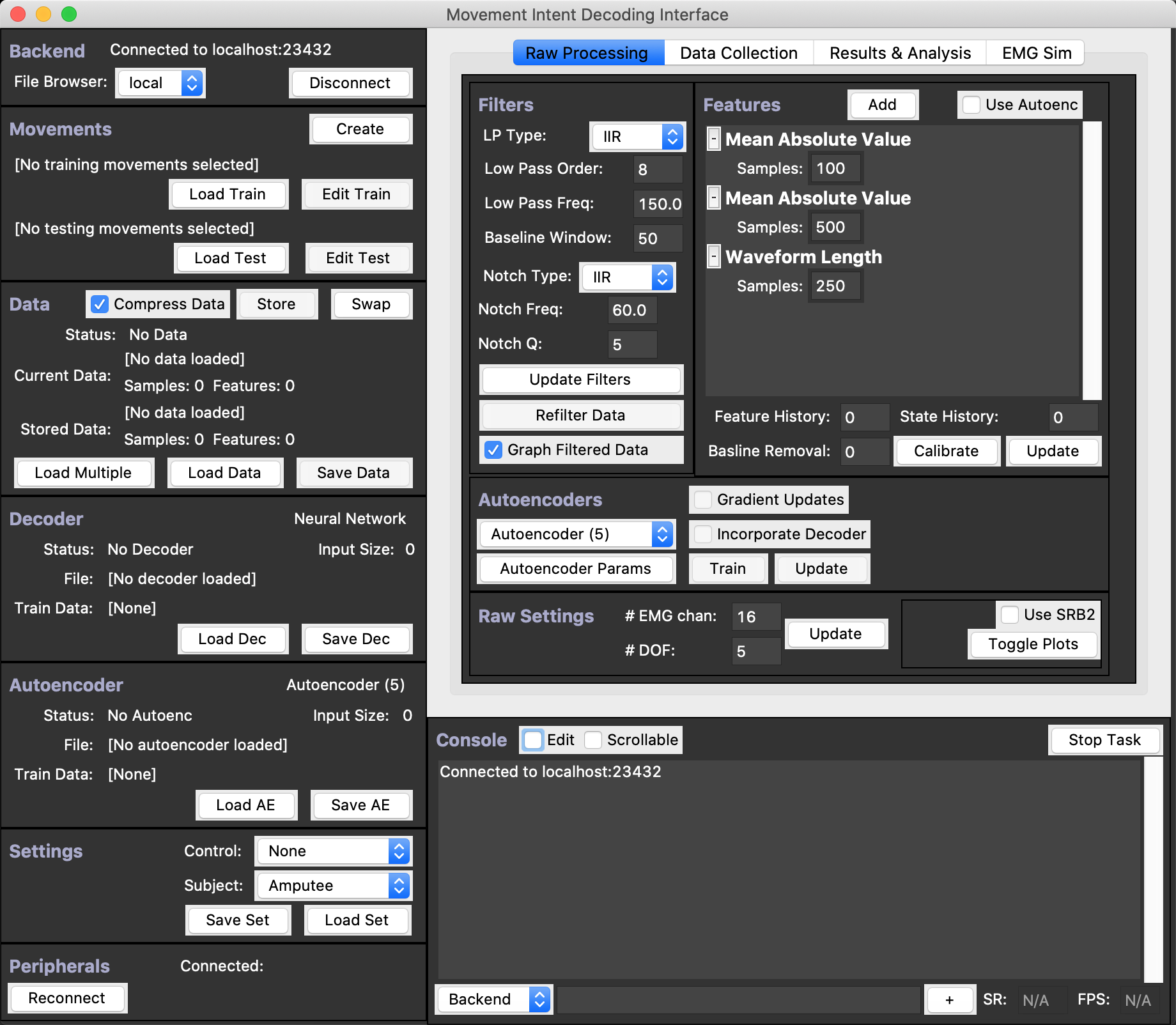

## The MINDecoding Interface

This is the Movement Decoding Interface!

It runs on Windows 10, macOS, or Linux.

With this software, you can:

* Collect raw EMG or EEG (or other related biological signals) from actual subjects;

* Or simulate EMG signals if you prefer;

* Filter those raw signals, and compute different sets of configurable Features (e.g. MAV) on the filtered data;

* Train a MINDecoder with those Features, which will allow a subject to actually control a prosthetic hand (either simulated or real) with their mind;

* Test trained MINDecoders either online with real subjects or offline with existing datasets;

* Configure and compute a set of Results metrics on your test datasets;

* Plot these metrics in a variety of formats, for either a single file or a group of files;

* Export results to CSV;

* Save/Load all data and configurations to/from either readable `json` files or compressed `pickle` files;

* And so much more!

For more details regarding all these capabilities, check out {doc}`capabilities/index`.

For more details regarding the Interface and how to use it, check out the detailed walkthrough in {doc}`gui/index` or some example workflows in {doc}`workflows/index`.
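To make the Features step above more concrete, here is a minimal sketch of MAV (Mean Absolute Value), computed over sliding windows of a filtered signal. The function names, window size, and step are illustrative assumptions and are not the project's own implementation or settings.

```python
def mean_absolute_value(window):
    """Return the mean of the absolute sample values in one window."""
    return sum(abs(sample) for sample in window) / len(window)


def sliding_mav(signal, window_size, step):
    """Compute MAV over consecutive windows of `signal`.

    Windows start every `step` samples; a trailing partial window is dropped.
    """
    if window_size <= 0 or step <= 0:
        raise ValueError("window_size and step must be positive")
    last_start = len(signal) - window_size
    return [
        mean_absolute_value(signal[start:start + window_size])
        for start in range(0, last_start + 1, step)
    ]


if __name__ == "__main__":
    # Toy signal: two overlapping windows of four samples each.
    print(sliding_mav([1, -1, 2, -2, 3, -3], window_size=4, step=2))
```

Each output value summarizes the signal amplitude in one window, which is the kind of compact quantity a decoder can be trained on.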

## Extra Windows

The MINDecoding Interface software includes a few other windows as well.

For example, the Raw Plots Window, shown below, allows you to visualize the raw or filtered biological input signals (and their frequency spectra) while collecting data or while replaying old data.

For more details check out {doc}`plotters/index`.

## Peripherals

A variety of peripherals can be connected to the MINDecoding Interface, including the OpenBCI Cyton, Daisy, and WiFi boards for recording EMG and EEG signals, the MuJoCo physics simulator, and an actual robotic hand!

The full list of current peripherals can be found in {doc}`peripherals`.

More peripherals are being added all the time. Feel free to contact the developers if you'd like support for another peripheral, or check out the Developer Documentation for information on how to code a new Peripheral yourself.

## Repository Structure

Here is the structure of the MINDecoding-Interface repository:

```
MINDecoding-Interface/
├── data/
│   ├── movements/
│   ├── saved_data/
│   ├── saved_decoders/
│   ├── scripts/
│   │   ├── compress.py
│   │   ├── decompress.py
│   │   └── utah_client.py
│   ├── settings/
│   │   └── defaults.json
│   └── shortcuts/
│       ├── default_shortcuts.json
│       └── mac_shortcuts.json
├── peripherals/
│   ├── glove_to_servo/
│   ├── mujoco/
│   └── read_sensor_data/
├── src/
│   └── main.py
└── README.md
```

The `data/` folder contains various subfolders that hold the data used by the program.

Some of these folders already contain a few useful files, such as the default settings and shortcuts, as well as the `compress.py` and `decompress.py` scripts, which convert data files between `json` (slow and large but readable), `pickle` (fast and compressed), and `gzip` (slow but very compressed).
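The trade-off between these formats can be sketched with the Python standard library. This is not the code in `compress.py` or `decompress.py`; the helper names are hypothetical, and gzipping the JSON text (rather than the pickle bytes) is an assumption made for the example.

```python
import gzip
import json
import pickle


def to_json_bytes(data):
    """Serialize to human-readable, indented JSON text."""
    return json.dumps(data, indent=2).encode("utf-8")


def to_pickle_bytes(data):
    """Serialize to Python's binary pickle format."""
    return pickle.dumps(data, protocol=pickle.HIGHEST_PROTOCOL)


def to_gzip_bytes(data):
    """Serialize to JSON, then gzip-compress the result (assumed layering)."""
    return gzip.compress(to_json_bytes(data))


def from_gzip_bytes(blob):
    """Reverse to_gzip_bytes: decompress, then parse the JSON text."""
    return json.loads(gzip.decompress(blob).decode("utf-8"))


if __name__ == "__main__":
    # Toy recording: 8 channels of 1000 samples each.
    recording = {"channels": [[0.0] * 1000 for _ in range(8)]}
    for name, encoder in [
        ("json", to_json_bytes),
        ("pickle", to_pickle_bytes),
        ("gzip", to_gzip_bytes),
    ]:
        print(name, len(encoder(recording)), "bytes")
```

Note that `pickle` files should only be loaded from sources you trust, since unpickling can execute arbitrary code.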

The `peripherals/` directory contains two Arduino programs that control some of the project's peripherals, as well as the free MuJoCo software for Windows.

Check out {doc}`peripherals` for more information.

Lastly, the `src/` directory contains all the Python source code, most importantly `main.py`, which runs the MINDecoding Interface.

## Next Steps

* To install and use the MINDecoding Interface: {doc}`installation_and_usage`

* For more details regarding the software's capabilities: {doc}`capabilities/index`

* For more details regarding the Interface and how to use it: {doc}`gui/index`

* For details regarding all the supported Peripherals: {doc}`peripherals`

* For some example workflows using the Interface: {doc}`workflows/index`

* For detailed listings of all the settings used in the MINDecoding Interface: {doc}`settings/index`
